Today I'll walk through installing these two, and fill in the pitfalls you're likely to hit along the way.

First, download hadoop 3.3.6

hbase 2.5.5

hadoop

hbase

winutils-3.3.6

- Other versions differ a lot, so I won't go into them here

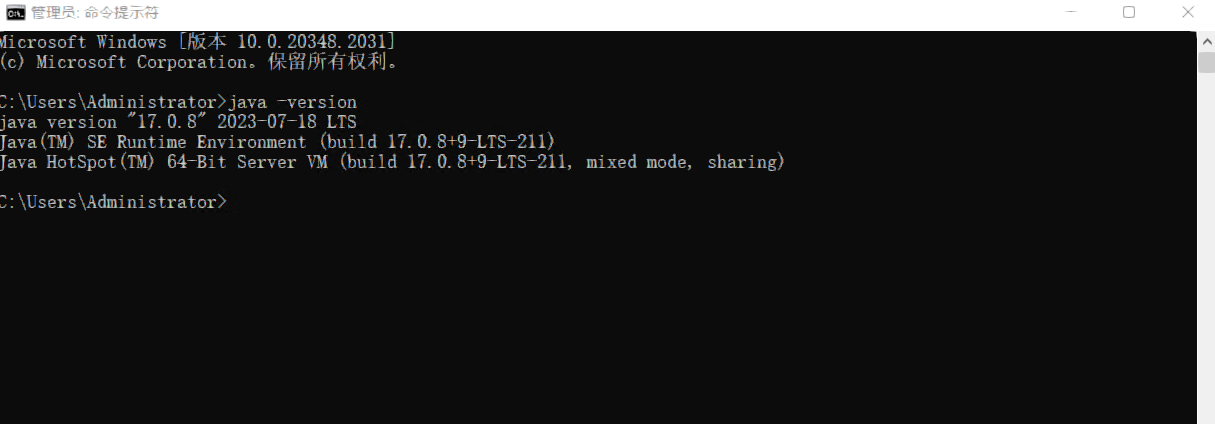

For Java, I'm using 17.

I won't cover setting up the JDK itself; guides for that are one search away -.-

Extract the hadoop and hbase archives you just downloaded.

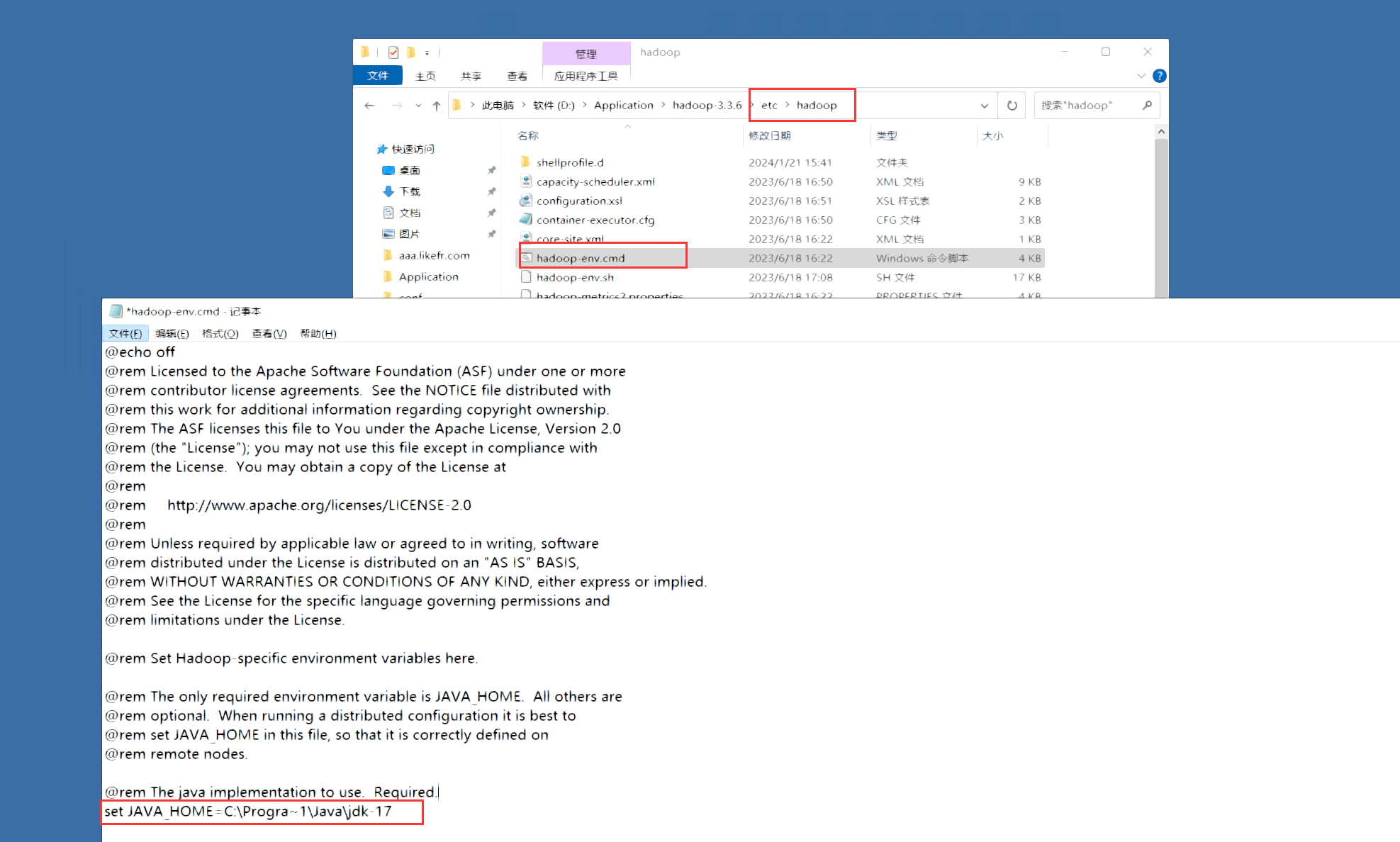

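Next, edit some of the hadoop configuration first. The config files are in the etc/hadoop folder.

Progra~1 stands for Program Files. The path can't contain spaces, so write Progra~1 instead, for example:

C:\Progra~1\Java\jdk-17

Save the file when you're done.

Next, extract winutils and put hadoop.dll and winutils.exe into the hadoop bin directory.

Put a copy of each in C:\Windows\System32 as well.

Then restart the computer.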
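To double-check the copy step before rebooting, a small script can report which folders are still missing either file. This is only a sketch in Python (assuming Python 3 is installed). The `missing_winutils` helper is my own name, not part of hadoop, and the hadoop path in the example is a guess based on the paths used later in this post.

```python
from pathlib import Path

# The two files this guide copies out of the winutils download.
REQUIRED = ("hadoop.dll", "winutils.exe")


def missing_winutils(target_dirs):
    """Return {directory: [missing file names]} for folders lacking a file."""
    report = {}
    for d in target_dirs:
        missing = [name for name in REQUIRED if not (Path(d) / name).is_file()]
        if missing:
            report[str(d)] = missing
    return report


if __name__ == "__main__":
    # Assumed locations: change the hadoop path to wherever you extracted it.
    dirs = [r"D:\Application\hadoop-3.3.6\bin", r"C:\Windows\System32"]
    for folder, names in missing_winutils(dirs).items():
        print(f"{folder} is missing: {', '.join(names)}")
```

If it prints nothing, both folders have both files.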

hdfs-site.xml (change the paths to match your own machine)

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Set this to 1 because this is a single-node hadoop setup -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<!-- Remember to create this directory -->

<value>/D:/Application/hadoop-3.3.6/temp/data/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<!-- Remember to create this directory -->

<value>/D:/Application/hadoop-3.3.6/temp/data/hdfs/datanode</value>

</property>

<property>

<name>dfs.permissions</name>

<!-- So that files can be created and uploaded from the web page -->

<value>false</value>

</property>

</configuration>
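The comments in this file say to create the namenode and datanode directories yourself. Rather than doing it by hand, something like the following could read the two paths out of hdfs-site.xml and create them. It's a rough sketch, assuming Python 3; the function names are mine, and the handling of the leading slash in `/D:/...` is simply what looked reasonable for the values shown above.

```python
import re
import xml.etree.ElementTree as ET
from pathlib import Path

# The two directory properties used in the hdfs-site.xml above.
DIR_KEYS = ("dfs.namenode.name.dir", "dfs.datanode.data.dir")


def read_properties(site_xml):
    """Return the <name>/<value> pairs of a hadoop *-site.xml as a dict."""
    root = ET.parse(site_xml).getroot()
    return {
        p.findtext("name", "").strip(): p.findtext("value", "").strip()
        for p in root.iter("property")
    }


def to_local_path(value):
    """Turn '/D:/some/dir' into 'D:/some/dir'; leave other paths unchanged."""
    return Path(re.sub(r"^/(?=[A-Za-z]:/)", "", value))


def create_hdfs_dirs(site_xml):
    """Create the namenode and datanode folders named in hdfs-site.xml."""
    props = read_properties(site_xml)
    created = []
    for key in DIR_KEYS:
        if key in props:
            path = to_local_path(props[key])
            path.mkdir(parents=True, exist_ok=True)  # no error if it exists
            created.append(path)
    return created
```

Usage would be along the lines of `create_hdfs_dirs(r"D:\Application\hadoop-3.3.6\etc\hadoop\hdfs-site.xml")`, with the path adjusted to your install.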

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>
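A typo in this one value is easy to miss, so it can be handy to read the address back out of core-site.xml and see what host and port it really says. A minimal sketch, assuming Python 3; `namenode_address` is a made-up helper name. It looks up `fs.defaultFS` as well because that is the current name of the deprecated `fs.default.name` key used above.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit


def namenode_address(core_site_xml):
    """Return (host, port) from fs.defaultFS or the older fs.default.name."""
    root = ET.parse(core_site_xml).getroot()
    props = {
        p.findtext("name", "").strip(): p.findtext("value", "").strip()
        for p in root.iter("property")
    }
    uri = props.get("fs.defaultFS") or props.get("fs.default.name")
    if not uri:
        raise KeyError("no default filesystem configured")
    parts = urlsplit(uri)  # splits hdfs://host:port into its pieces
    return parts.hostname, parts.port
```

For the file above this should give `localhost` and `9000`.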

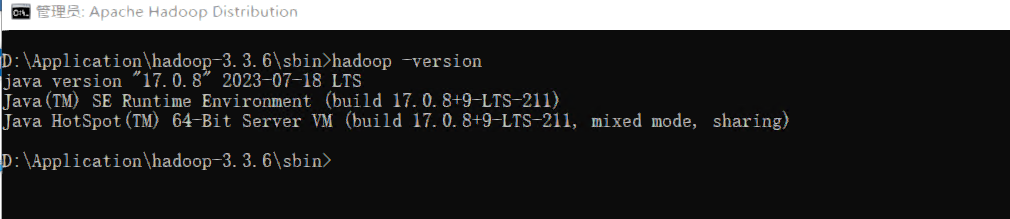

Verify the Hadoop installation

Then, on the command line, run hadoop version (the subcommand has no leading dash)

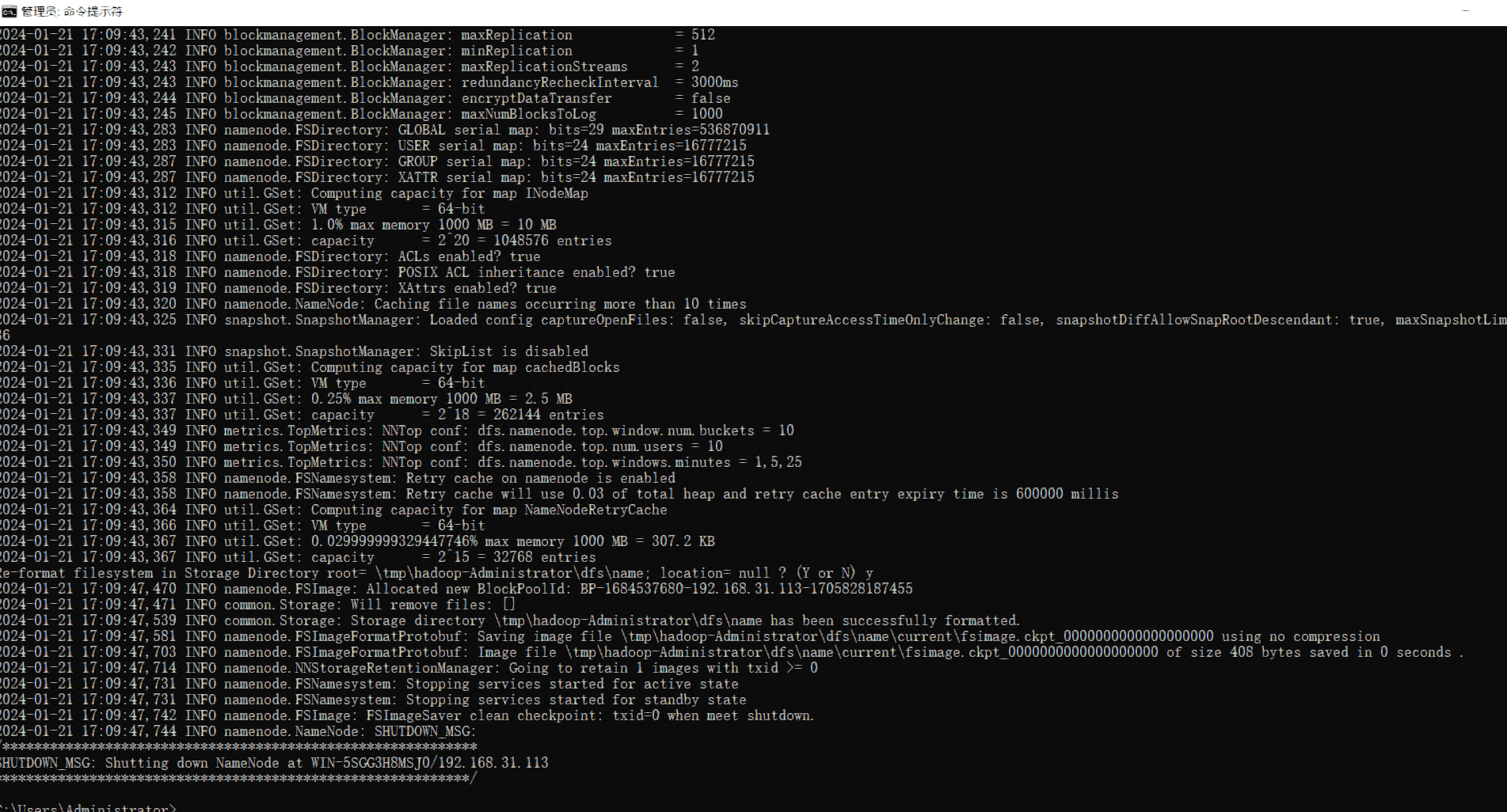

Next, initialize hadoop:

hdfs namenode -format

You may need to enter Y to confirm the overwrite.

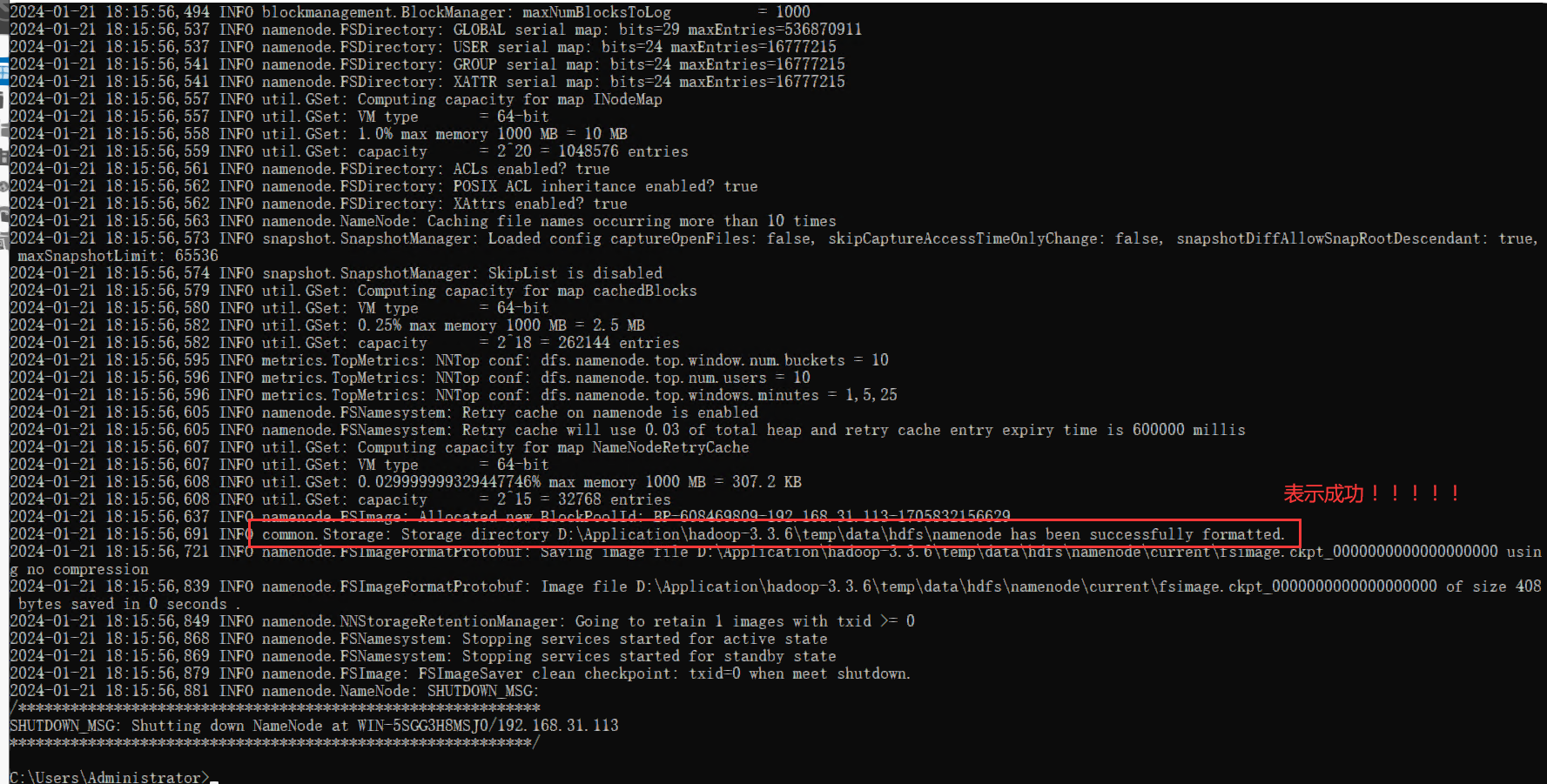

If it looks like the screenshot, it worked!

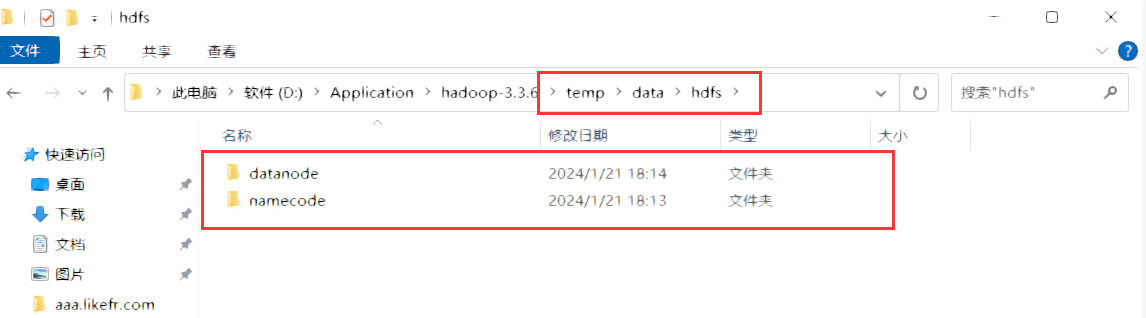

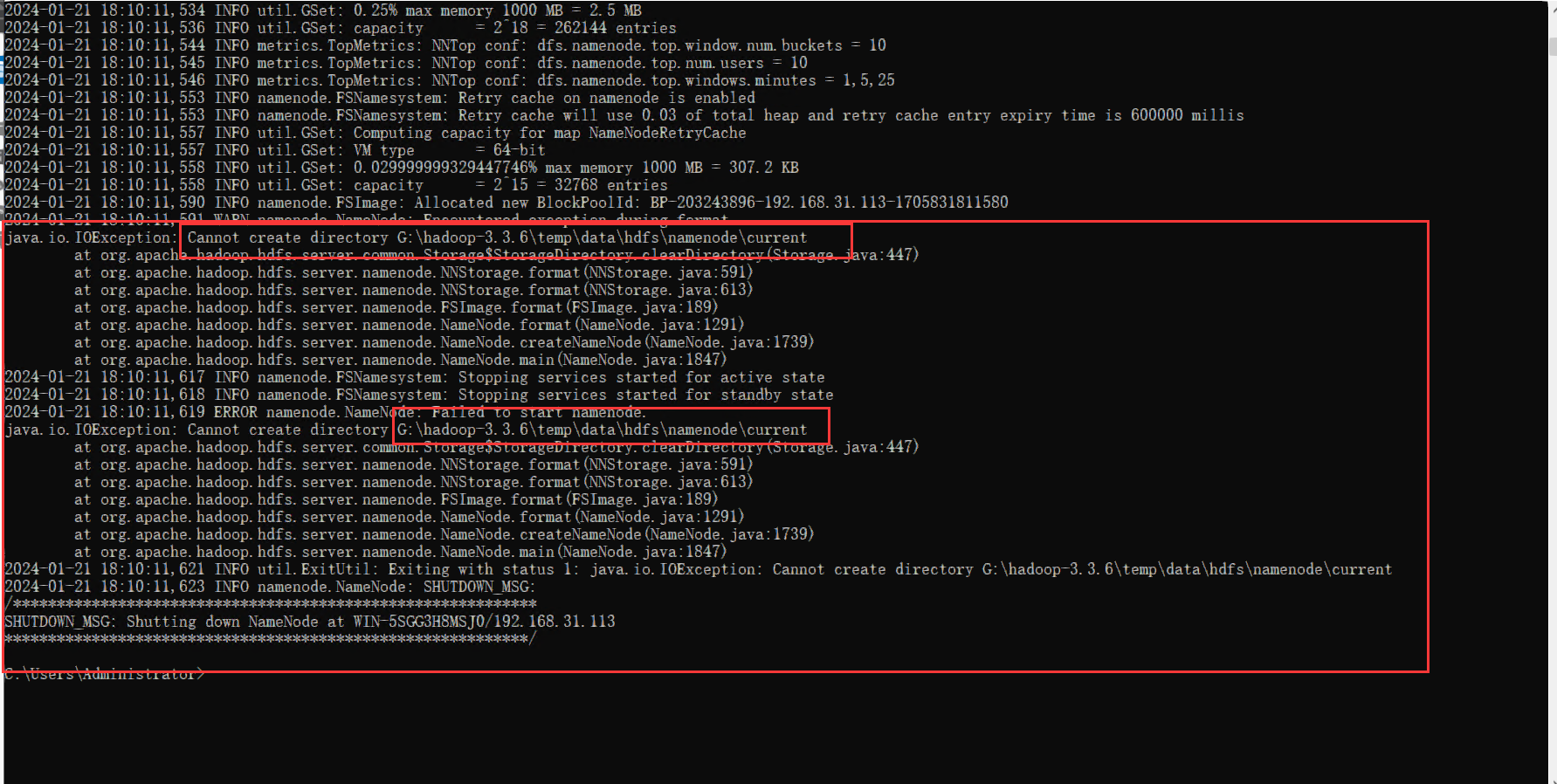

If it fails and throws an exception instead, that means you need to create the folders yourself!!

Next up: hbase

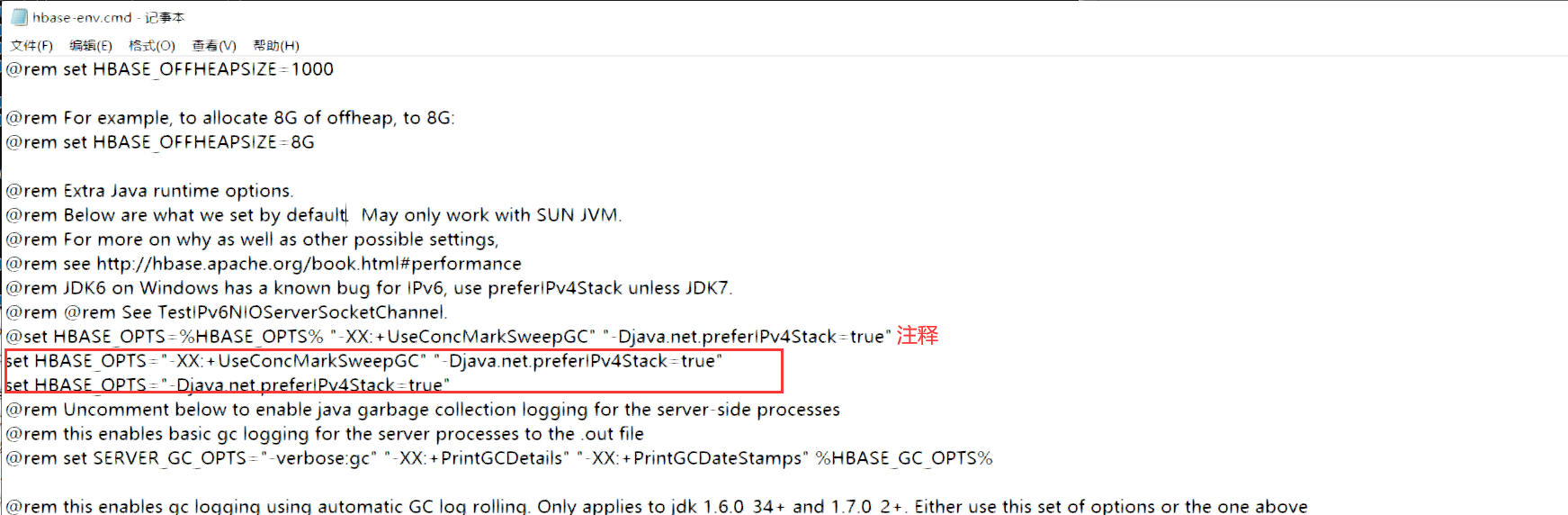

Open hbase-env.cmd in the conf folder.

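Change the JAVA_HOME= line to the same value you used for hadoop.

Remember to delete the @ symbol. (In a .cmd file, a line that still starts with rem is a comment, so drop the rem too if you want the line to take effect.)

rem set JAVA_HOME=C:\Progra~1\Java\jdk-17

rem set HBASE_MANAGES_ZK=false

Then add the following, as in the screenshot: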

set HBASE_OPTS="-XX:+UseConcMarkSweepGC" "-Djava.net.preferIPv4Stack=true"

set HBASE_OPTS="-Djava.net.preferIPv4Stack=true"

The two lines above are needed because the CMS garbage collector was removed in JDK 14, so the UseConcMarkSweepGC flag is gone as well:

JEP 363: Remove the Concurrent Mark Sweep GC

So either switch back to Java 8 or 11, or change the two lines above. Save the file when you're done.
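The rule is simple enough to write down as code: keep the CMS flag only on a JDK that still has CMS. Here is a small sketch, assuming Python 3. Both function names are hypothetical, and the cutoff of 14 comes from JEP 363, which removed CMS in JDK 14.

```python
import re


def java_major(version_output):
    """Extract the major version from `java -version` output, or None."""
    m = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if not m:
        return None
    first = int(m.group(1))
    # Java 8 and earlier report themselves as 1.x (for example "1.8.0_391").
    if first == 1 and m.group(2):
        return int(m.group(2))
    return first


def hbase_opts(major):
    """Pick HBASE_OPTS flags: keep the CMS flag only where CMS still exists."""
    opts = ["-Djava.net.preferIPv4Stack=true"]
    if major < 14:  # CMS was removed by JEP 363 in JDK 14
        opts.insert(0, "-XX:+UseConcMarkSweepGC")
    return opts
```

Note that `java -version` writes to stderr, so if you feed it in from a script, read it with something like `subprocess.run(["java", "-version"], capture_output=True, text=True).stderr`.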

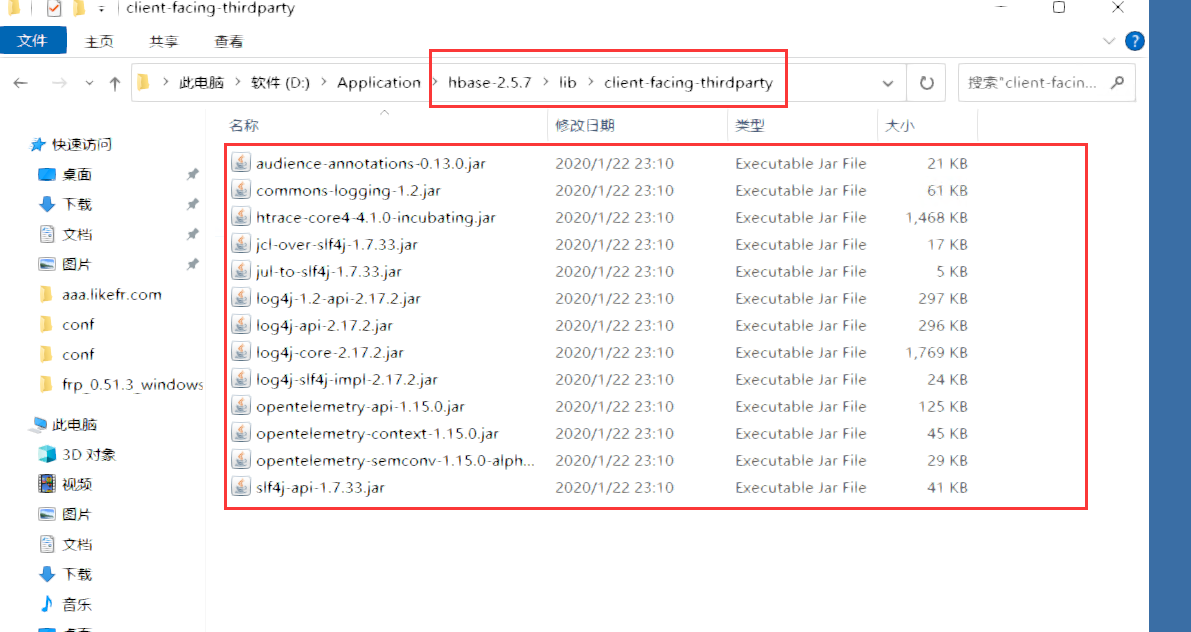

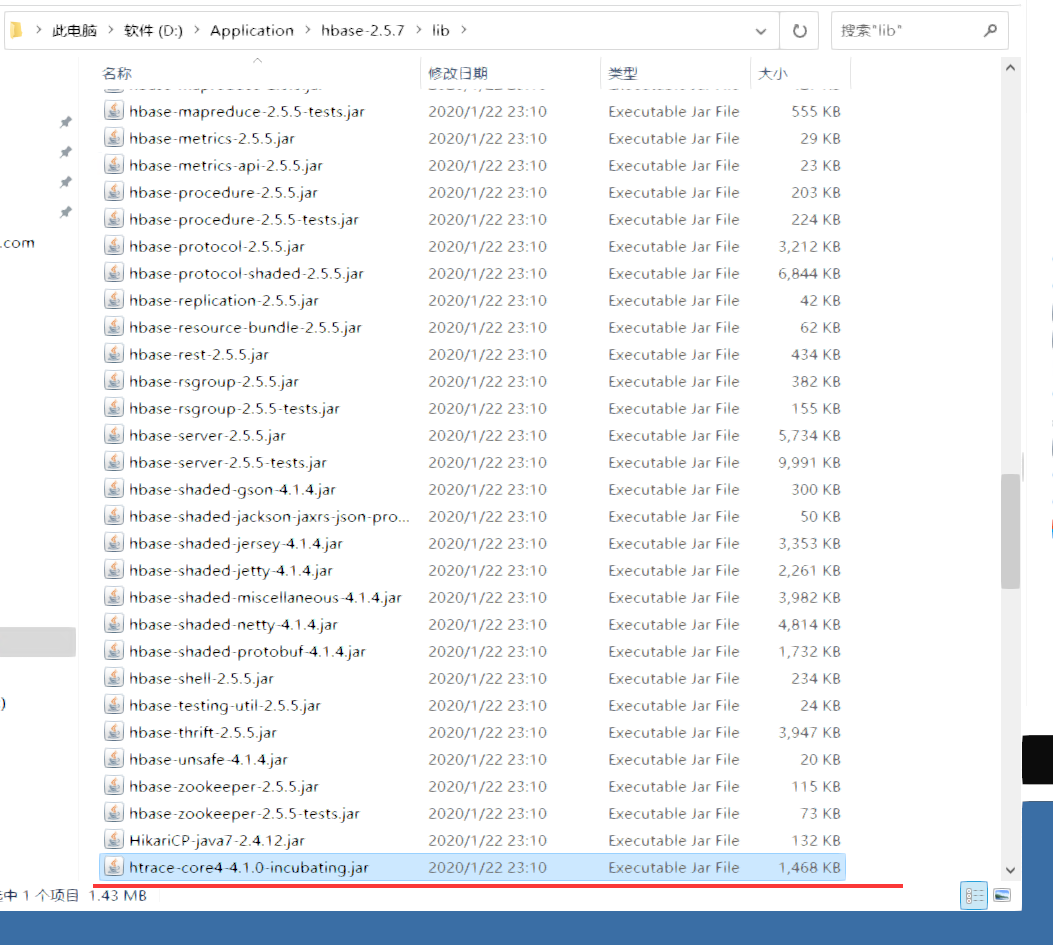

Open the hbase directory hbase-2.5.7\lib\client-facing-thirdparty

Copy all the jar files in it up one level, into hbase-2.5.7\lib

If you're asked whether to overwrite, just overwrite.
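If you'd rather not drag files around in Explorer, the copy can be sketched in a few lines of Python (assuming Python 3). `copy_thirdparty_jars` is just a name I picked; it overwrites files of the same name in lib, which matches the "just overwrite" advice.

```python
import shutil
from pathlib import Path


def copy_thirdparty_jars(hbase_home):
    """Copy every jar in lib/client-facing-thirdparty up into lib."""
    lib = Path(hbase_home) / "lib"
    copied = []
    for jar in sorted((lib / "client-facing-thirdparty").glob("*.jar")):
        shutil.copy2(jar, lib / jar.name)  # overwrites an existing file
        copied.append(jar.name)
    return copied
```

For example `copy_thirdparty_jars(r"D:\Application\hbase-2.5.7")` returns the list of jar names it copied.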

Finally, zookeeper needs to be configured.

At this point hbase can more or less be started.

2024-01-23 20:38:10,360 ERROR [main] regionserver.HRegionServer (HRegionServer.java:<init>(722)) - Failed construction RegionServer

java.lang.NoClassDefFoundError: org/apache/htrace/core/Tracer$Builder

Steps to fix:

1. Copy htrace-core4-4.1.0-incubating.jar from hbase/lib/client-facing-thirdparty into hbase/lib

2. Then delete htrace-core4-4.1.0-incubating.jar from hbase/lib/client-facing-thirdparty (optional, you can leave it)

3. Restart

D:\Application\hbase-2.5.7\bin>start-hbase.cmd

Exception in thread "main" java.lang.NoClassDefFoundError: org/slf4j/LoggerFactory

at org.apache.hadoop.conf.Configuration.<clinit>(Configuration.java:185)

at org.apache.hadoop.hbase.util.HBaseConfTool.main(HBaseConfTool.java:36)

Caused by: java.lang.ClassNotFoundException: org.slf4j.LoggerFactory

at java.base/jdk.internal.loader.BuiltinClassLoader.loadClass(BuiltinClassLoader.java:641)

at java.base/jdk.internal.loader.ClassLoaders$AppClassLoader.loadClass(ClassLoaders.java:188)

at java.base/java.lang.ClassLoader.loadClass(ClassLoader.java:520)

... 2 more

ERROR: Could not determine the startup mode.

Download hbase again and re-extract it (most likely the files were incomplete).
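Before downloading again, it may be worth confirming that the jar is really missing. The sketch below (Python 3 assumed) searches hbase's lib folder and its subfolders for an slf4j-api jar, since `org.slf4j.LoggerFactory` normally ships in a jar named `slf4j-api-<version>.jar`. The helper names are mine, and treating "no non-empty slf4j-api jar" as "incomplete extract" is only a heuristic.

```python
from pathlib import Path


def find_jars(hbase_home, pattern):
    """List files under hbase's lib folder (subfolders too) matching pattern."""
    lib = Path(hbase_home) / "lib"
    return sorted(p for p in lib.rglob(pattern) if p.is_file())


def looks_incomplete(hbase_home):
    """True when no non-empty slf4j-api jar can be found under lib."""
    jars = find_jars(hbase_home, "slf4j-api-*.jar")
    return not any(j.stat().st_size > 0 for j in jars)
```

If `looks_incomplete(r"D:\Application\hbase-2.5.7")` comes back `True`, a fresh download and extract is the likely fix.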

Log for the hadoop ResourceManager failing to start

Reference: [https://blog.csdn.net/qq_43083688/article/details/112580539](https://blog.csdn.net/qq_43083688/article/details/112580539)